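It has been a long time since my last update. This time I'm writing up my deep learning course assignment from UCAS (University of Chinese Academy of Sciences), which turned out to be quite fun.

1 Model Training

1.1 Image Data Acquisition

To build your own object detector, you first need a suitable dataset. You can collect and annotate images yourself or use an open-source one. Here I used an open-source annotated CS2 dataset of counter-terrorists (CT) and terrorists (T).

CS2 CT/T annotated data: Yolov8-CS2-detection/data at main · ZeeChono/Yolov8-CS2-detection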

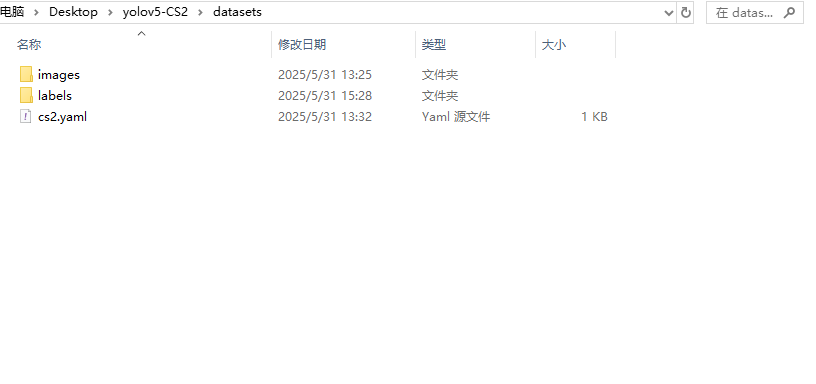
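The labels in this dataset are YOLO txt files. Each line describes one object as class x_center y_center width height, and the four coordinates are normalized to [0, 1] by the image width and height. The class index follows the names order in cs2.yaml (0 CT, 1 T, 2 CTh, 3 Th). Below is a minimal sketch that converts one line to pixel corners, for example to spot-check annotations. yolo_line_to_xyxy is a hypothetical helper, not a YOLOv5 function.

```python
# Class index -> name, following the names section of cs2.yaml
NAMES = {0: "CT", 1: "T", 2: "CTh", 3: "Th"}


def yolo_line_to_xyxy(line: str, img_w: int, img_h: int):
    """Convert a normalized 'cls xc yc w h' label line to (name, x1, y1, x2, y2) in pixels."""
    cls, xc, yc, w, h = line.split()  # raises ValueError if the line does not have 5 fields
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return (
        NAMES[int(cls)],
        round(xc - w / 2), round(yc - h / 2),
        round(xc + w / 2), round(yc + h / 2),
    )


print(yolo_line_to_xyxy("1 0.5 0.5 0.25 0.5", 1920, 1080))  # -> ('T', 720, 270, 1200, 810)
```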

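Create a dataset folder named datasets in the YOLOv5 root (the name used by path in cs2.yaml and by --data datasets/cs2.yaml below), and put the images and labels folders inside it.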
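YOLO-format training pairs every image under images/<split> with a same-named .txt file under labels/<split>. YOLOv5 treats an image without a label file as a background image (no objects) rather than failing. A missing label therefore silently becomes a negative example, so it is worth checking for one before training. Below is a minimal sketch that assumes the images/train, images/val, labels/train, labels/val layout that cs2.yaml points to. The names find_unlabeled and IMAGE_EXTS are my own.

```python
from pathlib import Path

# Image extensions to look for (an assumption; extend as needed)
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def find_unlabeled(root: str, split: str) -> list:
    """Return names of images in root/images/<split> that lack root/labels/<split>/<stem>.txt."""
    img_dir = Path(root) / "images" / split
    lbl_dir = Path(root) / "labels" / split
    missing = []
    for img in sorted(img_dir.iterdir()):
        if img.suffix.lower() in IMAGE_EXTS and not (lbl_dir / f"{img.stem}.txt").exists():
            missing.append(img.name)
    return missing
```

Running find_unlabeled("datasets", "train") from the YOLOv5 root lists the training images that have no label file.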

1.2 Configure Training Parameters

Dataset config file cs2.yaml

- Change path, train, val and test to the custom dataset's directories

- Set the classes to CT, T, CTh and Th

```yaml
# Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license
# Adapted from coco128.yaml for the CS2 dataset
# Example usage: python train.py --data datasets/cs2.yaml

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: ./datasets # dataset root dir
train: images/train # train images (relative to 'path')
val: images/val # val images (relative to 'path')
test: # test images (optional)

# Classes
names:
  0: CT
  1: T
  2: CTh
  3: Th
```

Model config file yolov5m_CS2.yaml

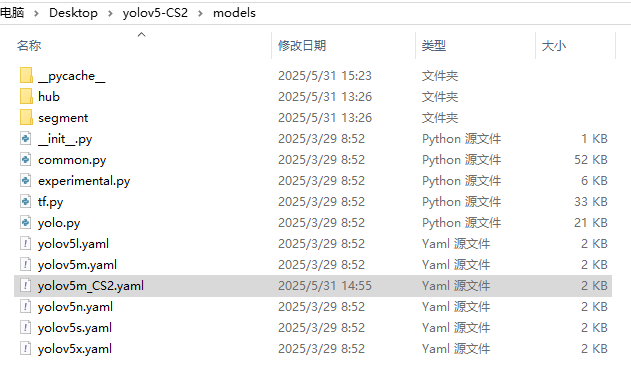

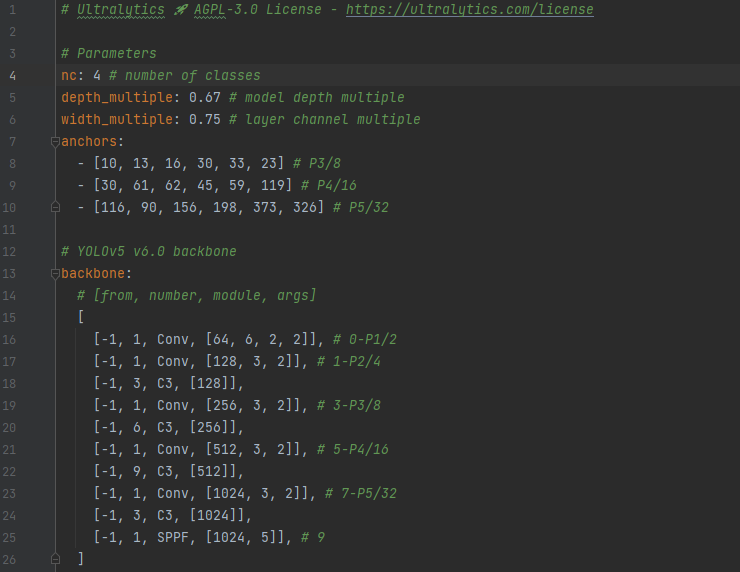

In the models directory, copy yolov5m.yaml to a custom file (yolov5m_CS2.yaml) and change:

- nc to 4 (the number of classes)
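Here is why nc must match the dataset. In YOLOv5 the Detect head predicts, for each of the 3 anchors per output level, 4 box values, 1 objectness score and nc class scores. Each output convolution therefore has na × (nc + 5) channels. Changing nc from 80 (COCO) to 4 shrinks this from 255 to 27. Because of the shape change, those head layers cannot be loaded from the COCO-pretrained checkpoint and are trained from a fresh initialization. The arithmetic, as a quick check:

```python
def detect_head_channels(nc: int, na: int = 3) -> int:
    """Output channels of each YOLOv5 Detect conv: na anchors x (4 box + 1 objectness + nc classes)."""
    return na * (nc + 5)


print(detect_head_channels(80))  # COCO: 255
print(detect_head_channels(4))   # CT, T, CTh, Th: 27
```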

1.3 Training

```bash
python train.py --cfg models/yolov5m_CS2.yaml --data datasets/cs2.yaml --weights yolov5m6.pt --epochs 150 --batch-size 32
```

I trained on the Beijing Super Cloud Computing platform. PyTorch environment setup:

```bash
# Create a virtual environment
conda create -n py39 python=3.9

# Activate / deactivate it
conda activate py39
conda deactivate

# Set the pip mirror
pip config set global.index-url https://mirrors.aliyun.com/pypi/simple/

# Install the dependencies (from the yolov5 repo root)
pip install -r requirements.txt
```

Job script run.sh

```bash
#!/bin/bash
module load anaconda/2020.11
module load cuda/11.7
source activate py39

python train.py --cfg models/yolov5m_CS2.yaml --data datasets/cs2.yaml --weights yolov5m6.pt --epochs 150 --batch-size 32
```

Submit the job

```bash
sbatch --gpus=1 ./run.sh
```

View the log ([id] is the job ID printed by sbatch)

```bash
tail -f slurm-[id].out
```

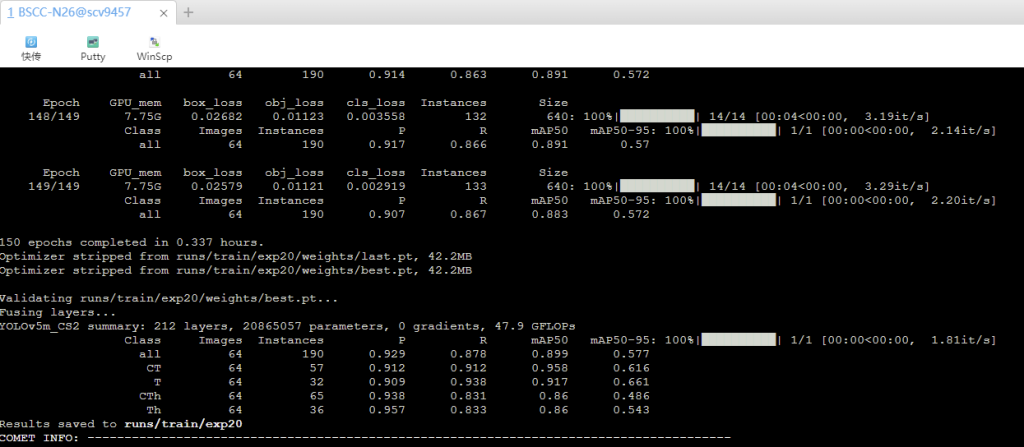

Training results

The results are in the last runs/train/exp* directory, and the best model weights are in its weights/best.pt.
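YOLOv5 numbers successive runs exp, exp2, exp3 and so on, so a plain string sort puts exp9 after exp20. Below is a minimal sketch for locating best.pt in the highest-numbered run, assuming the default runs/train layout. latest_best_weights is a hypothetical helper.

```python
import re
from pathlib import Path


def latest_best_weights(runs_dir: str = "runs/train") -> Path:
    """Return runs_dir/exp<N>/weights/best.pt for the largest N (a bare 'exp' counts as 1)."""

    def run_number(p: Path) -> int:
        m = re.fullmatch(r"exp(\d*)", p.name)
        return int(m.group(1) or 1) if m else -1  # -1 marks non-run entries

    runs = [p for p in Path(runs_dir).iterdir() if p.is_dir() and run_number(p) > 0]
    if not runs:
        raise FileNotFoundError(f"no exp* runs under {runs_dir}")
    return max(runs, key=run_number) / "weights" / "best.pt"
```

MODEL_PATH in the detection script below could be set to str(latest_best_weights()) instead of hard-coding exp20.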

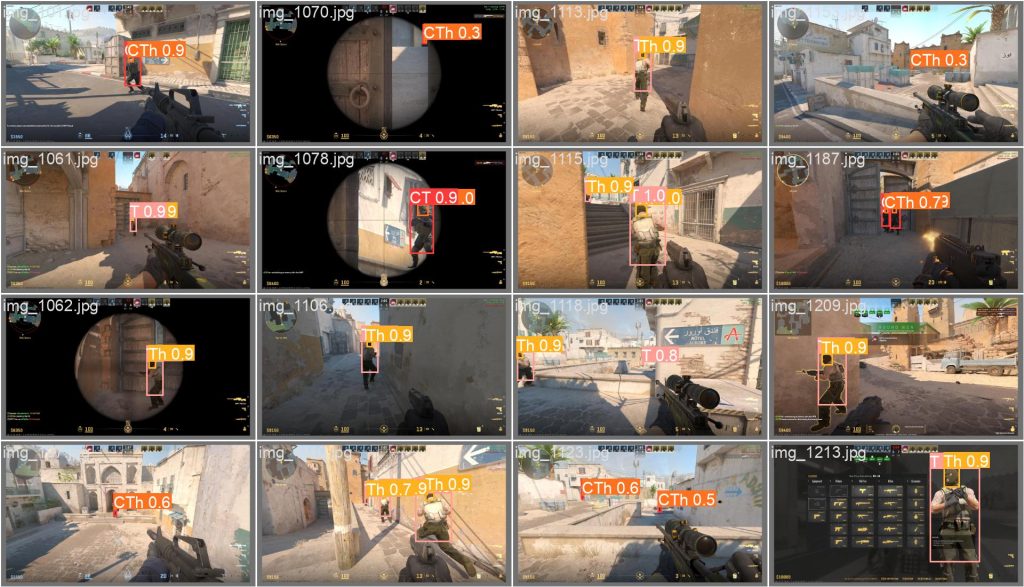

Results on the validation set

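2 Real-Time Screen Detection

2.1 Approach

- Capture the screen in real time with mss

- Run object detection on each frame with YOLOv5

- Use PyQt5 to create a transparent full-screen window (frameless, always on top, and click-through so it does not intercept the mouse)

- Redraw the boxes and labels every frame, so the overlay refreshes automatically and leaves no stale boxes behind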
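The approach above runs two loops at once: a worker thread that captures and detects, and the Qt event loop that paints. The script below hands boxes over by rebinding the global detections list. Under CPython this is safe, because rebinding a name is a single atomic step and the old list is never mutated afterwards. If you prefer the hand-off to be explicit, here is a minimal sketch with a lock. LatestResult and fake_detector are hypothetical names, and fake_detector only stands in for the real capture-and-detect loop.

```python
import threading
import time


class LatestResult:
    """Keeps only the newest detections: the worker overwrites them, the painter takes a snapshot."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._items: tuple = ()

    def publish(self, items) -> None:
        # Store an immutable copy so a reader never sees a list that is still being filled
        with self._lock:
            self._items = tuple(items)

    def snapshot(self) -> tuple:
        with self._lock:
            return self._items


def fake_detector(result: LatestResult, frames: int) -> None:
    """Stand-in for detect_loop: publishes one made-up box per 'frame'."""
    for i in range(frames):
        result.publish([(i, i, i + 10, i + 10, f"frame {i}")])
        time.sleep(0.001)
```

In the overlay, paintEvent would then iterate over result.snapshot() instead of the global list.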

```python
# screendetect.py
# Run from the YOLOv5 repository root so that models/ and utils/ can be imported.
import sys
import time
import pathlib
from threading import Thread

import mss
import numpy as np
import torch
from PyQt5.QtWidgets import QApplication, QWidget
from PyQt5.QtGui import QPainter, QColor, QPen, QFont
from PyQt5.QtCore import Qt, QTimer

# Weights trained on Linux contain pickled PosixPath objects; remap them so loading works on Windows.
# Only needed on Windows (WindowsPath cannot be instantiated on Linux/macOS).
if sys.platform == 'win32':
    pathlib.PosixPath = pathlib.WindowsPath

# === YOLOv5 utilities ===
from models.common import DetectMultiBackend
from utils.general import non_max_suppression, scale_boxes
from utils.torch_utils import select_device
from utils.augmentations import letterbox

# === Model path ===
MODEL_PATH = 'runs/train/exp20/weights/best.pt'

# === Initialize the model ===
device = select_device('')  # '' = CUDA if available, otherwise CPU
model = DetectMultiBackend(MODEL_PATH, device=device)
stride, names = model.stride, model.names
imgsz = (640, 640)

# === Screen region to capture ===
monitor = {"top": 0, "left": 0, "width": 1920, "height": 1080}

# === Detection results, replaced once per frame ===
detections = []


# === PyQt5 overlay window ===
class Overlay(QWidget):
    def __init__(self):
        super().__init__()
        self.setWindowTitle('Screen Overlay')
        self.setWindowFlags(Qt.WindowStaysOnTopHint | Qt.FramelessWindowHint | Qt.Tool)
        self.setAttribute(Qt.WA_TranslucentBackground)
        self.setAttribute(Qt.WA_TransparentForMouseEvents)  # click-through
        screen_geometry = QApplication.desktop().screenGeometry()
        self.setGeometry(screen_geometry)

        # Timer: repaint every 30 ms
        self.timer = QTimer(self)
        self.timer.timeout.connect(self.update_overlay)
        self.timer.start(30)

    def update_overlay(self):
        self.update()  # schedules a paintEvent

    def paintEvent(self, event):
        painter = QPainter(self)
        painter.setRenderHint(QPainter.Antialiasing)

        # Label font
        font = QFont()
        font.setPointSize(10)
        painter.setFont(font)

        # Draw every box from the latest frame
        painter.setPen(QPen(QColor(0, 255, 0), 2))
        for x1, y1, x2, y2, label in detections:
            painter.drawRect(x1, y1, x2 - x1, y2 - y1)
            painter.drawText(x1, y1 - 5, label)
        painter.end()


# === Background detection loop ===
@torch.no_grad()
def detect_loop():
    global detections
    # Create the mss instance in this thread; mss handles are not always usable across threads
    with mss.mss() as sct:
        while True:
            # mss returns BGRA; drop alpha to get a BGR frame, like cv2.imread would
            im0 = np.ascontiguousarray(np.array(sct.grab(monitor))[:, :, :3])

            img = letterbox(im0, imgsz, stride=stride, auto=True)[0]
            img = img.transpose((2, 0, 1))[::-1]  # HWC to CHW, BGR to RGB (the model was trained on RGB)
            img = np.ascontiguousarray(img)
            img = torch.from_numpy(img).to(device).float() / 255.0
            if img.ndimension() == 3:
                img = img.unsqueeze(0)  # add batch dimension

            pred = model(img, augment=False, visualize=False)
            pred = non_max_suppression(pred, conf_thres=0.4, iou_thres=0.45)

            new_detections = []
            for det in pred:
                if len(det):
                    # Map boxes from the letterboxed input back to screen pixels
                    det[:, :4] = scale_boxes(img.shape[2:], det[:, :4], im0.shape).round()
                    for *xyxy, conf, cls in det:
                        label = f'{names[int(cls)]} {conf:.2f}'
                        x1, y1, x2, y2 = map(int, xyxy)
                        new_detections.append((x1, y1, x2, y2, label))
            detections = new_detections  # swap in the new list with one assignment
            time.sleep(0.03)  # reduce CPU usage


# === Entry point ===
if __name__ == '__main__':
    # Start detection in a background thread
    thread = Thread(target=detect_loop, daemon=True)
    thread.start()

    # Start the PyQt5 overlay window
    app = QApplication(sys.argv)
    overlay = Overlay()
    overlay.show()
    sys.exit(app.exec_())
```
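The loop above resizes each 1920×1080 capture with letterbox(auto=True) before inference, then uses scale_boxes to map boxes back to screen pixels. The arithmetic can be reproduced in plain Python. The two functions below are my own simplified re-derivation (square 640 target, stride 32, no scaleup limit, no clipping), not the YOLOv5 code.

```python
def letterbox_shape(h: int, w: int, target: int = 640, stride: int = 32):
    """Return the (h, w) and (pad_x, pad_y) that letterbox(auto=True) would produce (simplified)."""
    r = min(target / h, target / w)  # keep aspect ratio, fit the longer side
    new_w, new_h = round(w * r), round(h * r)
    # auto=True keeps only the padding needed to reach a multiple of the stride
    dw, dh = (target - new_w) % stride, (target - new_h) % stride
    return (new_h + dh, new_w + dw), (dw / 2, dh / 2)


def scale_box_back(box, img1_shape, img0_shape):
    """Map an (x1, y1, x2, y2) box from the letterboxed image (img1) back to the original (img0)."""
    gain = min(img1_shape[0] / img0_shape[0], img1_shape[1] / img0_shape[1])
    pad_x = (img1_shape[1] - img0_shape[1] * gain) / 2
    pad_y = (img1_shape[0] - img0_shape[0] * gain) / 2
    x1, y1, x2, y2 = box
    return ((x1 - pad_x) / gain, (y1 - pad_y) / gain,
            (x2 - pad_x) / gain, (y2 - pad_y) / gain)
```

For a 1920×1080 frame this gives a 384×640 network input with 12 pixels of padding at the top and bottom. A box edge at y = 12 in the model's coordinates therefore maps back to y = 0 on screen.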

2.2 Results

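Disclaimer: for personal learning, research, offline demos and other legitimate uses only; it must not be used to undermine fair play in the game.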
